The Future of Life Institute has been working on AI governance-related issues for the last decade. We're already over 10 years old, and our mission is to steer very powerful technology away from large-scale harm and toward very beneficial outcomes. On the risk side, you could think about any kind of extreme risks from AI, all the way to existential or extinction risk, the worst kinds of risks. On the benefit side, you can think about any kind of large benefits that humans could achieve from technology, all the way through to utopia, right? Utopia is the biggest benefit you can get from technology. Historically, that has meant we have focused on climate change, for example, and the impact of climate change. We have also focused on bio-related risks, pandemics, and nuclear security issues. If things go well, we will be able to avoid these really bad downsides in terms of existential risk, extinction risks, mass surveillance, and really disturbing futures. We can avoid that very harmful side of AI or technology, and we can achieve some of the benefits.

Today, we take a closer look at the future of artificial intelligence and the policies that determine its place in our societies. Risto Uuk is Head of EU Policy and Research at the Future of Life Institute in Brussels, and a philosopher and researcher at KU Leuven, where he studies the systemic risks posed by AI. He has worked with the World Economic Forum, the European Commission, and leading thinkers like Stuart Russell and Daniel Susskind. He also runs one of the most widely read newsletters on the EU AI Act. As this technology is transforming economies, politics, and human life itself, we’ll talk about the promises and dangers of AI, how Europe is trying to regulate it, and what it means to build safeguards for a technology that may be more powerful than anything we’ve seen before.

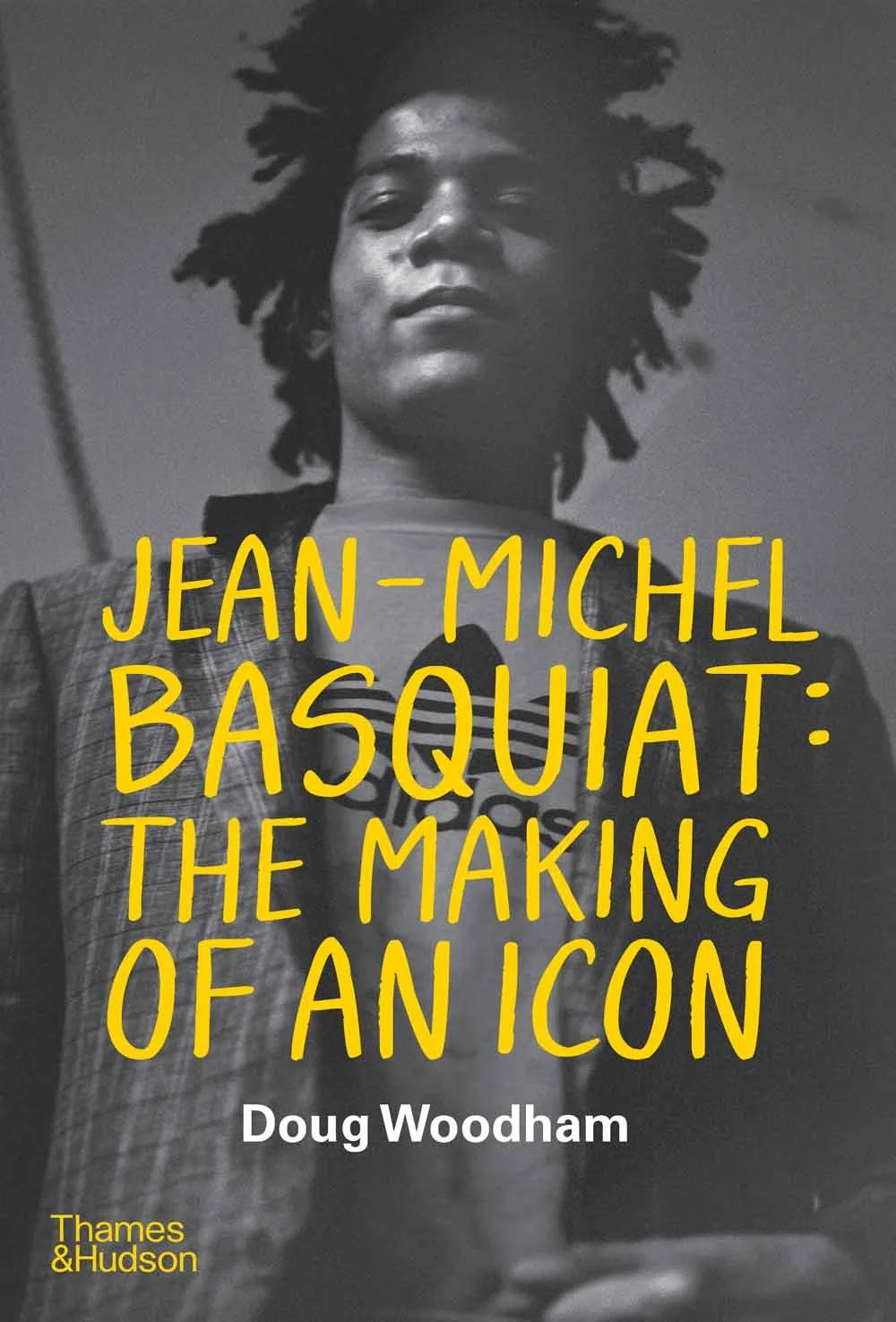

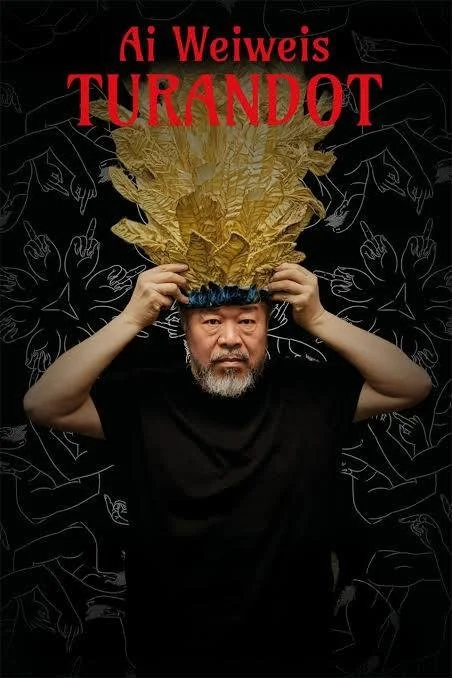

THE CREATIVE PROCESS

Risto, you're the Head of EU Policy and Research at the Future of Life Institute. What do you envisage for the future of life in the next 10, 20, 50 years?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

Obviously, people talk about solving cancer, solving climate change, ensuring that we continue reducing poverty rates, increasing equality in the world, and just improving wellbeing and health significantly. We have been able to achieve a lot of success as a society in terms of all of those things.

I think people have become more educated, healthier, and more prosperous. All those things have improved with technology. However, sometimes we see really bad negatives as well, which indicate that technology is not inherently beneficial. We have to, as a society, direct it toward positive outcomes.

For example, people are concerned about mental health among teenagers due to social media consumption. People are worried about obesity rates and rising cancer rates. Even though we've achieved a lot of success in that area, it's still a massive issue. The same goes for cardiovascular diseases. We have so many significant issues that we could make progress on, and technology could help with that. However, that's not guaranteed to happen. For the last 10-plus years, I've personally been quite worried that technology could be seen not as enhancing our wellbeing or improving our lives, but rather as taking our attention and causing unnecessary disruption.

I'm worried about how values other than increasing welfare and wellbeing could influence technological development. So that's some of my thoughts in a nutshell.

THE CREATIVE PROCESS

I couldn't agree more. Everyone, of course, quotes the possibility of finding novel health cures for cancer and other ailments. But if we can't flourish in other ways, if it takes our jobs and we're no longer seen as valuable, as you say, this does affect our mental health. We don't have that kind of pride in work and community, and it affects the food we eat.

I know that physical health is something important to you, which you've learned from your experiences. You didn't come from a technological background; philosophy was your aim before you trained in this area.

I think one of the most crucial issues of our time is that the benefits seem to come with a lot of challenges, and that's why governance is really important. We need to implement the regulations and guardrails we put in place, which is another significant obstacle.

Just tell us a little about your background. What brought you first to philosophy and then to this concern for the impacts of technology on society?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

In primary school, I was very interested in learning and was a great student. I competed in math Olympiads and was one of the top students. I was really curious about all kinds of fields. After sixth grade, I studied at one of the top schools in Estonia, and there, something changed. I became much less interested in learning.

For some reason, the education had quite a negative impact on my curiosity and my interest in understanding things. Maybe the learning was too demanding. I was in a math and science class, so perhaps the workload was just too much. Another factor was that I felt forced to learn certain things without enough space to study on my own. So, really, throughout high school, I didn't put much effort into studying. I didn't care very much about it.

My choice of university degree after high school was completely random. I just chose business and economics without making a conscious decision that it was the best thing to do. Very soon, I realized that was a mistake because it was just a continuation of the same experience I had in high school, which was rote memorization—trying to remember everything, cramming for exams, finishing those exams. I felt I wasn't actually learning anything.

When I decided to drop out of university, I wrote a blog post titled "Why I Dropped Out of University." This turned out to be extremely popular; it was read by over 50,000 Estonians. Estonia is very small, with about a million people, so at one point, nearly 10% of the population had read my blog post. Even today, over a decade later, I still receive emails from people expressing gratitude for that post because they felt pressured by society to choose random university degrees without reflecting on their choices.

In any case, I dropped out and pursued my earlier passion as a personal trainer. For over five years, I built a career in health and fitness and nutrition, helping people improve their lives through training, nutrition, and lifestyle habits. My aim was to help them lose fat, improve their body composition, and become healthier.

My approach was a bit different from other personal trainers because I spent a lot of time reading scientific evidence on training and nutrition. I was quite evidence-based and scientifically minded. I utilized motivational interviewing, which means I wouldn’t just tell my clients to eat five pieces of fruit and vegetables per day. Instead, I would ask them questions, guiding them to realize what they should be doing with their time. This way, clients often came up with their own ideas, making them more likely to maintain those habits.

Eventually, I felt compelled by arguments that I wasn't doing enough to impact the world. I asked myself what would happen if I didn't take on certain clients. I realized that these clients would likely find other trainers. I couldn't convince myself that I was significantly better than others, especially considering the challenge of helping people lose weight. Scientific evidence shows that most people who lose a substantial amount of body weight regain it within two years—90% or more.

Currently, we're seeing that some weight-loss drugs may be more effective than trainers. I lost my drive in that career because I couldn't see how I could make a meaningful difference. I started exploring other fields, such as animal welfare, improving lives for animals in factory farms, global poverty, and climate change. I tested my fit in those areas, starting various projects related to research, running nonprofits, and organizing events to find where my interests truly lay.

I eventually worked as a science editor for Estonian Public Broadcasting, covering various topics, including AI issues. I found AI pressing and interesting, and I felt I could contribute. I participated in an AI safety camp focused on research projects related to AI safety issues. I ended up researching the AI policymaking process, exploring how learnings from political science could be applied to AI. My paper got accepted in a peer-reviewed journal, which encouraged me.

I then decided to return to university for an undergraduate degree in philosophy and economics to think critically about how to live. I wanted to explore how to organize political institutions and how to understand knowledge, because I felt philosophy would provide deeper answers about the decisions a society should make. Economics would then help with allocating scarce resources to achieve those ends, which is why I wanted a combination of both disciplines.

THE CREATIVE PROCESS

If you want to become a plumber, I think you're probably safe for the future. Maybe chefs, too, though I'm not entirely sure; I’ve seen some robotic chefs already. But what's interesting is...

I know you've worked with economist and writer Daniel Susskind. When I interviewed him, he was skeptical about AI and advocated for safeguards. Like you, he also mentioned his children, reading them bedtime stories, and using AI to help create those stories. His children even come up with their own prompts, which encourages them to be inventive and creative at a young age, perhaps even before they could write those stories on their own.

It's a kind of co-creation. The kids have ideas, collaborate with AI, and together they make their bedtime stories. That's a wonderful way to use AI to nurture creativity and imaginative thinking. I'm really open to that kind of collaboration.

On another note, regarding artists, I would love to see a universal basic income for them. Artists play a crucial role in society, showing us new ways of seeing and thinking, yet many live from hand to mouth. Their work has often been scraped without their consent. They've been marginalized, seen as wishful thinkers rather than voices of concern.

It's easier to support mega stars who are already doing well economically, but for the majority of artists, how could such a system work in practice? I'd really like to hear your thoughts on that.

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

Absolutely. There are good reasons to be skeptical of the feasibility of universal basic income, because even getting corporations to pay enough taxes has been extremely challenging. A study I read indicated that major companies avoid about $600 billion in taxes each year. Many corporations move their headquarters to tax havens to avoid taxes.

Even achieving a global minimum tax has been a long, hard battle. It's reasonable to be skeptical about universal basic income, especially since it would be extremely expensive. Many would complain about that. Even paying parents a certain amount each month has been a significant challenge.

For example, people in the U.S. look to the EU for having achieved robust social safety nets, but in the U.S. itself, that's been tough going. During the COVID period, some measures were put in place, but after the pandemic ended, those payments were discontinued.

These kinds of initiatives are hard to achieve. I don't know what the best solutions might be, but some have proposed licenses for creatives. AI developers and companies would have to obtain a license to train AI models on artists' content rather than just scraping it without consent.

But the space around AI copyright and how to use artists' materials is very challenging. Many lawsuits are ongoing, with numerous challenges arising.

THE CREATIVE PROCESS

Honestly, everyone likes free things, but the apps and tools I pay for are the ones I really use. Usually, paid options are geared toward professionals, while casual users stick with free versions unless the free version lacks functionality they consider essential.

If a tool is too slow or inefficient, people may upgrade to a paid version, which often involves licensing. However, we haven't seen that kind of respect or structure from major AI players. Historically, companies like Google invested heavily in research and model training, but the landscape is so different now.

How do you see the EU AI Act shaping things, especially with Europe taking the lead in AI governance? How might it ripple out to the U.S. and China? How does it compare to what those regions are doing, and could it encourage a more responsible global approach to AI governance?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

There’s a concept called the Brussels effect, which is the idea that when the EU regulates technology or any other area, it affects the rest of the world: companies adapt their practices to meet EU requirements, and governments may even adopt similar regulations.

Currently, there's strong skepticism surrounding the possibility of the U.S. government copying anything from the EU. However, I am less skeptical of the concept because, during a recent research visit to the Stanford Digital Economy Lab, I observed firsthand how AI companies in California are paying attention to the EU AI Act and modifying their risk assessment practices to comply with it.

You saw that with the EU Code of Practice finalized in July this year, which essentially provides guidelines for companies to comply with the EU AI Act's general-purpose AI rules. Most big tech companies in the U.S. signed the Code of Practice, indicating a desire to comply.

So far, it appears that there is a significant shift in terms of companies altering their practices because the EU market is very important for these tech companies. For many of them, the EU market might even be more attractive than the U.S. market due to competition.

There also seems to be some influence from the EU AI Act on other countries' governments. For example, South Korea recently introduced its own AI law, Vietnam has followed suit, and Brazil is finalizing its AI law. Several U.S. states, such as Colorado, are considering similar laws, but while they might be inspired by the EU AI Act, they won’t copy it exactly.

The EU definitely has a huge impact on many other countries in the world.

THE CREATIVE PROCESS

You mentioned Stanford, which we collaborate with on a podcast and various episodes. You are at KU Leuven, where we brought The Creative Process exhibition in collaboration with the European Conference on Humanities. Education is at the forefront of our minds.

What implications do you see for the future of education with AI? How is it affecting our critical thinking abilities? You talked about coming up in an environment where effort mattered. Machines can do things for us, but there's personal satisfaction in doing things ourselves. What should we be thinking about regarding the future of education and AI?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

Great question. I'm quite worried about the impact of AI on education and think we should reflect on it thoroughly.

Recently, I came across an interesting example on social media from an undergraduate student at UC Berkeley. They stated that since they started using tools like ChatGPT, their curiosity about the world had diminished. They had become more focused on generating outputs than on making mistakes and growing. I found that perspective fascinating because I tend to feel that way too.

THE CREATIVE PROCESS

I really want to see the EU AI Act succeed. Of course, there will always be flaws and amendments, but it’s great to have this Brussels ripple effect, especially within the global sphere.

If not all countries comply or sign on, there are concerns about loopholes being created. AI companies whose products are accessible in the EU might not comply. How do we protect ourselves against this? It’s akin to a sieve: we block the holes in our own programs, yet our populations can still be affected as they source AI products originating outside the EU. Is there a kind of labeling system to identify ethically produced AI? How do we inform the public?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

The obvious answer is that we should collaborate more internationally. The EU should not just operate within its boundaries, even though the EU, with over 400 million people, represents a massive market that matters significantly.

Still, there are billions of people globally, and the EU should engage with other countries, raising awareness about what the EU AI Act entails. There is a lot of misinformation surrounding it, which is why we established the EU AI Act website and produce newsletters to inform as many people as possible.

It's essential for the EU to collaborate with other countries to find what makes sense for them. Some level of experimentation is reasonable; not every country needs to copy the EU's approach without deliberation.

Some aspects of the EU's initiatives may not always be positive or effective; they should also evolve based on evidence regarding the effectiveness of the measures put forth. Other countries should also experiment independently and adapt provisions from the EU or other jurisdictions.

Converging as much as possible is important because it provides legal certainty for businesses operating globally. Companies struggle when faced with entirely different laws in various regions, so whenever feasible, we should aim to harmonize regulations, though we cannot do this indefinitely due to cultural differences and citizens' differing values.

THE CREATIVE PROCESS

Regionally, there are European values we need to safeguard. In closing, as you look to the future and the importance of having human-centered AI, the significance of the arts and humanities, and human-authored creativity, what would you like young people to remember and preserve?

RISTO UUK · THE FUTURE OF LIFE INSTITUTE

Great question. Young people face the challenge of navigating the age of AI. It's essential for them to experiment with AI and become knowledgeable about it. Interpersonal skills will become increasingly important, even though AI may come to perform many tasks as well as or better than people do.

Society might decide at some point that we don’t want to delegate everything to AI. Perhaps we prefer certain experiences to remain human, such as enjoying listening to two less-than-perfect individuals engaging in conversation.

As we navigate this age of AI, I hope young people develop meaningful interpersonal skills and abilities to interact in physical spaces. Writing and thinking exercises are still crucial; focusing on these tasks can improve one's skills.

My advice is to embrace the process of thinking and writing without defaulting to AI. After reflecting and honing these skills, young people can then use AI to amplify their writing and thinking later. Those would be some key takeaways for younger people navigating the AI landscape.